1. Story

- There are many cases in which we need to initialize an SQL database at application startup.

- At startup we may need to create the schema from DDL scripts, to load initial data with DML scripts, or both.

- Spring Boot provides simple features for developers to run both DDL and DML scripts automatically.

- In this post we put this feature into practice.

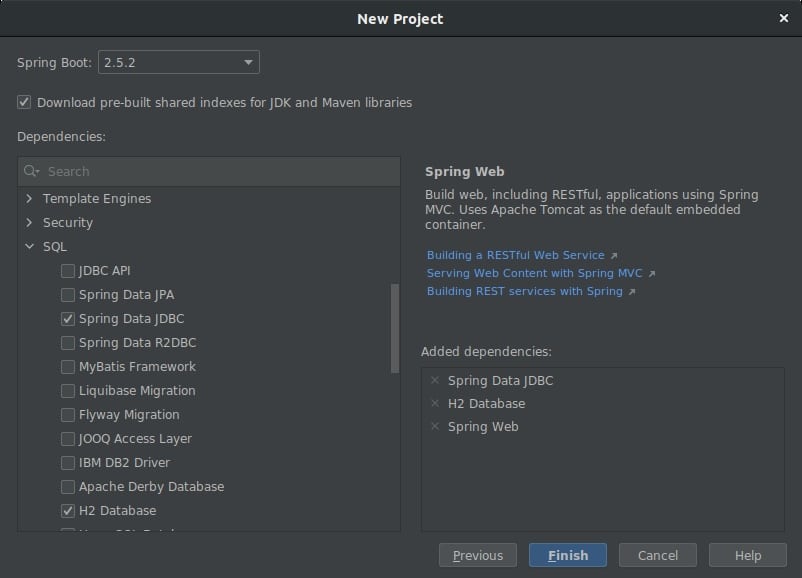

2. Create Project

You can find the complete project on GitHub.

Create a new project with the online Spring Initializr, either at start.spring.io or in IntelliJ IDEA > New Project > Spring Initializr.

In the dependencies window, select the three dependencies named in the "Dependencies" section of this post.

3. Dependencies

The only dependency needed to run SQL scripts automatically is spring-boot-starter-data-jdbc. We also use h2database as a simple embedded database, and spring-boot-starter-web to turn the project into a simple running application. The Initializr adds spring-boot-starter-test by default, so the dependencies in the pom.xml file look like this:

```xml
<!-- dependencies in pom.xml -->
<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-data-jdbc</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
        <groupId>com.h2database</groupId>
        <artifactId>h2</artifactId>
        <scope>runtime</scope>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-test</artifactId>
        <scope>test</scope>
    </dependency>
</dependencies>
<!-- end of dependencies-->
```

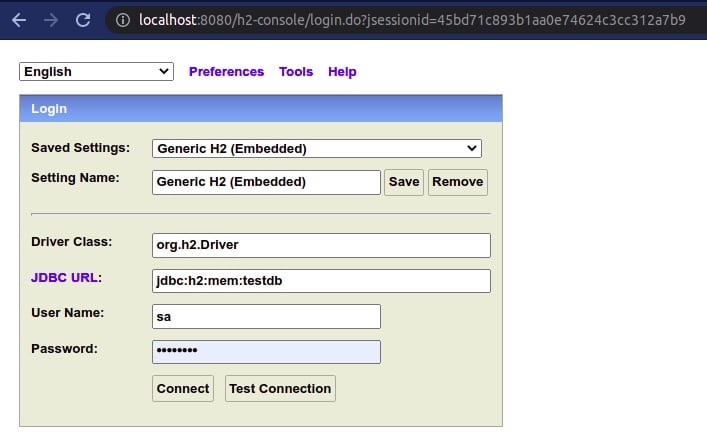

4. Configure H2 Database

Because of its simplicity, we choose embedded H2 as our SQL database, so there is no need to install a SQL database such as MySQL or Oracle in your environment.

H2 is a lightweight SQL database written in Java that can also be embedded in a Java application and run in memory.

Now it is time to tell Spring Data JDBC how to connect to our H2 database instance. We do this with a few configuration properties. So let's open the "application.properties" file in the "resources" folder and fill it in like this:

```properties
# run h2 console
spring.h2.console.enabled=true

# datasource info for h2
spring.datasource.url=jdbc:h2:mem:testdb
spring.datasource.driverClassName=org.h2.Driver
spring.datasource.username=sa
spring.datasource.password=password
spring.jpa.database-platform=org.hibernate.dialect.H2Dialect
```

Note that we want a GUI for H2, so we added "spring.h2.console.enabled=true" to the properties file. With this setting, running the application also gives us an H2 console in the web browser, which we discuss in a later section of this post.

The other properties describe how to reach our H2 database: the JDBC URL of an in-memory database named testdb, the driver class, and the username and password. The last property, spring.jpa.database-platform, only takes effect when JPA/Hibernate is on the classpath.
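To make the datasource settings more concrete, here is a minimal plain-JDBC sketch of roughly what they describe. It is only an illustration: Spring Boot builds a pooled DataSource from these properties rather than calling DriverManager like this. The class name `H2ConnectionSketch` and the helper `loadSettings` are hypothetical, and running `main` assumes the H2 driver is on the runtime classpath, as the pom.xml above arranges.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;
import java.util.Properties;

public class H2ConnectionSketch {

    // The datasource entries from application.properties, inlined for this sketch.
    public static final String SETTINGS = String.join("\n",
            "spring.datasource.url=jdbc:h2:mem:testdb",
            "spring.datasource.driverClassName=org.h2.Driver",
            "spring.datasource.username=sa",
            "spring.datasource.password=password");

    // Parses key=value lines into a Properties object.
    public static Properties loadSettings(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    public static void main(String[] args) throws SQLException {
        Properties p = loadSettings(SETTINGS);
        // JDBC 4 drivers register themselves, so the driver class name
        // does not have to be loaded explicitly here.
        try (Connection con = DriverManager.getConnection(
                p.getProperty("spring.datasource.url"),
                p.getProperty("spring.datasource.username"),
                p.getProperty("spring.datasource.password"))) {
            System.out.println("Connected to " + con.getMetaData().getURL());
        }
    }
}
```

Because the URL points at an in-memory database, a standalone run of this sketch would open its own empty database inside its own JVM; it would not see the tables created by the running Spring Boot application.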

5. DDL Scripts

In SQL, DDL stands for Data Definition Language. DDL statements create, modify, and remove database objects such as tables and indexes.

We add a file named schema.sql to the "resources" folder so that it is loaded onto the classpath. This file should contain all the DDL scripts for our desired database schema.

```sql
-- create a table for customers (plain BIGINT: H2 does not need the MySQL-style width)
create table Customers (
    id BIGINT NOT NULL AUTO_INCREMENT,
    name VARCHAR(100) NOT NULL,
    family VARCHAR(100),
    active BOOLEAN,
    PRIMARY KEY (id)
);

-- alter a table just as a sample
ALTER TABLE Customers
ADD description varchar(200)
AFTER family;
```

6. DML Scripts

In SQL, DML stands for Data Manipulation Language. DML statements are used for adding (inserting), deleting, and modifying (updating) data in a database.

In this step we create a file named "data.sql" in the "resources" folder, so that it is added to the classpath, and fill it with our desired DML statements:

```sql
-- DML statements
INSERT INTO Customers (id, name) VALUES (12, 'alex');

INSERT INTO Customers (name, family, description)
VALUES ('amir', 'kesha', 'inserted in initialization');
```

There are two INSERT statements in our data.sql file, and of course you can add any other INSERT, UPDATE, or DELETE statements.
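Conceptually, Spring Boot reads each script from the classpath, splits it into individual statements, and executes them in order, schema.sql first and then data.sql. The following is a deliberately simplified sketch of that splitting step, not Spring's actual implementation. `SqlScriptSplitter` and `split` are hypothetical names, and the sketch assumes that `;` separates statements and `--` starts a line comment; it does not handle semicolons or `--` inside string literals, or block comments.

```java
import java.util.ArrayList;
import java.util.List;

public class SqlScriptSplitter {

    // Splits a script into statements on ';' after dropping '--' line comments.
    // Simplification: quoted semicolons and block comments are not handled,
    // and runs of whitespace are collapsed to a single space.
    public static List<String> split(String script) {
        StringBuilder withoutComments = new StringBuilder();
        for (String line : script.split("\\R")) {
            int comment = line.indexOf("--");
            String code = comment >= 0 ? line.substring(0, comment) : line;
            withoutComments.append(code).append(' ');
        }
        List<String> statements = new ArrayList<>();
        for (String part : withoutComments.toString().split(";")) {
            String statement = part.trim().replaceAll("\\s+", " ");
            if (!statement.isEmpty()) {
                statements.add(statement);
            }
        }
        return statements;
    }

    public static void main(String[] args) {
        // The content of data.sql from this post, inlined for the sketch.
        String dataSql = String.join("\n",
                "-- DML statements",
                "INSERT INTO Customers (id, name) VALUES (12, 'alex');",
                "",
                "INSERT INTO Customers (name, family, description)",
                "VALUES ('amir', 'kesha', 'inserted in initialization');");
        for (String statement : split(dataSql)) {
            System.out.println(statement);
        }
    }
}
```

For the data.sql above, the sketch yields two statements, one per INSERT, which is why every statement in the script should end with a semicolon.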

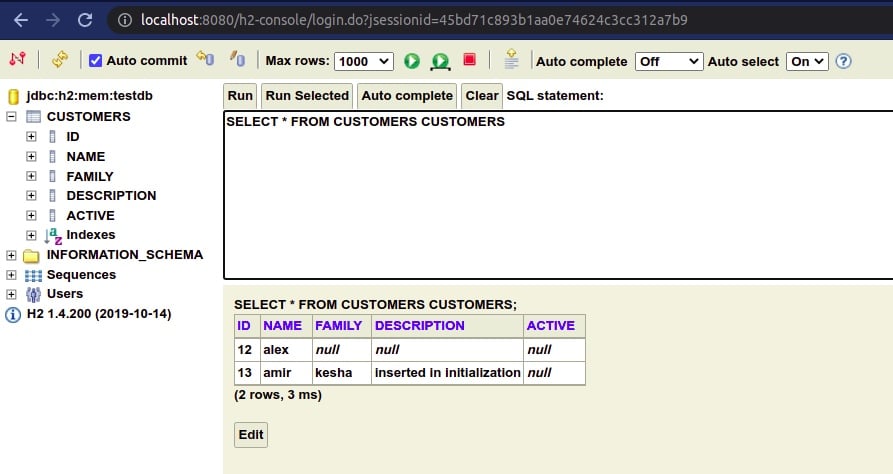

7. See Results in Console

Congrats, that's all. Now you can run your application, and if there are no bugs you will get a message like "Started DatabaseInitializationApplication in 1.828 seconds (JVM running for 2.548)" in your console/terminal.

So far so good. Let's open a browser and go to http://localhost:8080/h2-console. Log in with the credentials that you declared in the application.properties file, and make sure the JDBC URL field on the login form is set to jdbc:h2:mem:testdb.

Finally, you can find your automatically generated table(s) with the inserted data in the H2 console, just like in the image below.

8. Multi Datasources

If you need more than one datasource in your application, you must mark one of them as the primary one, so that Spring Data JDBC knows which datasource to work with.

If you do not mark a primary datasource, then at startup neither the H2 console nor Spring Data JDBC can find a primary datasource, and the application fails with an error message. So we add the properties for the second datasource to the application.properties file, define two beans, one for the primary and one for the second datasource, and annotate one of them with @Primary.
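As a rough sketch of the two-bean setup described above, a configuration class could look like the following. This is an assumption-laden illustration rather than the code of the original project: the class name `DataSourceConfig`, the bean method names, and the property prefix `app.datasource.second` are all hypothetical, and the package of `DataSourceProperties` shown here is the one used in Spring Boot 2.x and 3.x. The second datasource would need its own entries in application.properties under that prefix (url, username, password).

```java
import javax.sql.DataSource;

import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.boot.autoconfigure.jdbc.DataSourceProperties;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.context.annotation.Primary;

@Configuration
public class DataSourceConfig {

    // Binds the existing spring.datasource.* properties.
    @Bean
    @Primary
    @ConfigurationProperties("spring.datasource")
    public DataSourceProperties primaryDataSourceProperties() {
        return new DataSourceProperties();
    }

    // @Primary marks this as the datasource to use when only one is expected.
    @Bean
    @Primary
    public DataSource primaryDataSource(DataSourceProperties primaryProperties) {
        return primaryProperties.initializeDataSourceBuilder().build();
    }

    // Binds the hypothetical app.datasource.second.* properties.
    @Bean
    @ConfigurationProperties("app.datasource.second")
    public DataSourceProperties secondDataSourceProperties() {
        return new DataSourceProperties();
    }

    // Not primary: inject it elsewhere with @Qualifier("secondDataSource").
    @Bean
    public DataSource secondDataSource(
            @Qualifier("secondDataSourceProperties") DataSourceProperties secondProperties) {
        return secondProperties.initializeDataSourceBuilder().build();
    }
}
```

With this arrangement, any component that asks for a single DataSource receives the primary one, while the second datasource has to be requested explicitly by name.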

9. Further Reading

Spring Boot also supports higher-level migration tools, such as Flyway or Liquibase, for running database migrations at startup. This topic is beyond the scope of this post, and you can read more about it in the Spring documentation.

Thanks for reading. Good luck.